How to Start Using AI (Large Language Models) Directly on Your PC

Running a large language model (LLM) on your own PC is one of the best ways to experience AI without relying on cloud services. Not only do you gain full privacy, but you can also experiment with different models, fine-tune them, and even integrate them into your own apps.

In this guide, I’ll walk you through how to install and run an LLM locally on Windows using a beginner-friendly method.

Understanding What an LLM Is

An LLM (Large Language Model) is an AI program trained on massive amounts of text data to understand and generate human-like language. Models like GPT, LLaMA, and Mistral can handle tasks such as:

- Writing and editing text

- Summarizing information

- Answering questions

- Coding and debugging

When you run a model locally, your prompts and data are no longer sent to external servers. Everything stays on your machine.

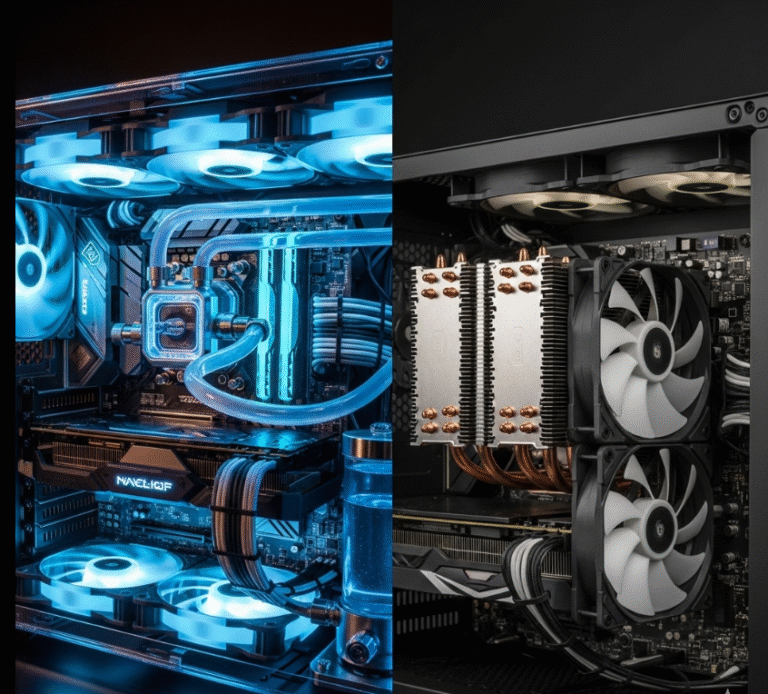

System Requirements

Running an LLM can be resource-heavy. Here’s what you’ll ideally want for smooth performance:

| Component | Recommended |
|---|---|
| CPU | 6+ cores (Intel i5/i7 or AMD Ryzen 5/7) |
| RAM | 16 GB minimum (32 GB preferred) |
| GPU | NVIDIA with at least 6 GB VRAM (for faster inference) |
| Storage | 20 GB+ free SSD space |
| OS | Windows 10 or 11 (64-bit) |

Note: You can still run smaller models without a dedicated GPU, but expect slower responses.
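If you want a quick sanity check before downloading anything, here is a minimal sketch in Python that compares your machine against two rows of the table. It uses only the standard library, so it covers CPU cores and free disk space but not RAM or VRAM (check those in Task Manager). The function name `check_system` and the threshold constants are my own choices for illustration, and `os.cpu_count()` reports logical cores, which may be higher than the physical core count.

```python
import os
import platform
import shutil

# Thresholds taken from the table above; adjust them to taste.
MIN_CPU_CORES = 6
MIN_FREE_DISK_GB = 20


def check_system(path="."):
    """Return a small report on OS, CPU cores, and free disk space."""
    cores = os.cpu_count() or 0  # logical cores; cpu_count() can return None
    free_gb = shutil.disk_usage(path).free / 1024**3
    return {
        "os": f"{platform.system()} {platform.release()}",
        "is_64bit": platform.machine().endswith("64"),
        "cpu_cores": cores,
        "cpu_ok": cores >= MIN_CPU_CORES,
        "free_disk_gb": round(free_gb, 1),
        "disk_ok": free_gb >= MIN_FREE_DISK_GB,
    }


report = check_system()
for key, value in report.items():
    print(f"{key}: {value}")
```

Pass the drive where you plan to store models (for example `check_system("C:\\")`) to check that drive's free space instead of the current folder's.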

Choosing a Model

Some popular models for local use include:

- LLaMA 2 – Meta’s open model, great for general use

- Mistral – Fast and efficient, good for chatbots

- Vicuna – Tuned for conversational AI

- Code LLaMA – Optimized for programming help

For beginners, I recommend starting with a 7B-parameter model. It is much smaller than the 13B and 70B variants (a 4-bit quantized 7B model is roughly a 4 GB download), so it runs smoothly on most modern PCs.
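To see why model size matters so much, it helps to do the arithmetic. The sketch below estimates the space taken by the weights alone as parameters × bits per weight ÷ 8. The function name `weight_size_gb` is just for illustration, and real memory use will be somewhat higher because the runtime also needs room for the context and other overhead.

```python
def weight_size_gb(params_billions, bits_per_weight):
    """Approximate size of the model weights in gigabytes (10^9 bytes)."""
    bytes_total = params_billions * 1e9 * bits_per_weight / 8
    return bytes_total / 1e9


for params in (7, 13, 70):
    fp16 = weight_size_gb(params, 16)
    q4 = weight_size_gb(params, 4)
    print(f"{params}B: ~{fp16:.1f} GB at 16-bit, ~{q4:.1f} GB at 4-bit")
```

By this estimate a 7B model needs about 14 GB at 16-bit precision but only about 3.5 GB at 4-bit, which is why a 7B model fits comfortably on a 16 GB machine while a 70B model does not.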

Installing an LLM Locally on Windows

We'll cover two of the easiest tools to set up: Ollama and LM Studio. You only need one of them.

Option 1: Installing with Ollama (Easiest for Beginners)

Step 1: Download Ollama

- Visit https://ollama.ai

- Click Download for Windows and run the installer.

Step 2: Open Ollama

- After installation, open Windows Command Prompt or PowerShell. The ollama command is available there.

Step 3: Pull Your Model

For example, to download LLaMA 2:

ollama pull llama2

Step 4: Run the Model

ollama run llama2

Type your prompt, and the model will reply right in the terminal.
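If you would rather send a single prompt from a script than type into the terminal, `ollama run` also accepts the prompt as an argument. Here is a minimal sketch that wraps that call with Python's `subprocess` module. The helper names `build_command` and `ask` are hypothetical, and the sketch assumes Ollama is installed, on your PATH, and that the model has already been pulled.

```python
import subprocess


def build_command(model, prompt):
    """Build the argument list for a one-shot, non-interactive run."""
    return ["ollama", "run", model, prompt]


def ask(model, prompt, timeout=300):
    """Run the model once and return its reply as text."""
    result = subprocess.run(
        build_command(model, prompt),
        capture_output=True,
        text=True,
        encoding="utf-8",
        timeout=timeout,
        check=True,  # raise CalledProcessError if ollama exits with an error
    )
    return result.stdout.strip()
```

With the model downloaded, `print(ask("llama2", "Explain what an LLM is in one sentence."))` should print the model's reply. The first call can be slow because the model has to be loaded into memory.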

Option 2: Installing with LM Studio (Graphical Interface)

Step 1: Download LM Studio

- Go to https://lmstudio.ai

- Download the Windows installer and run it.

Step 2: Choose a Model

- In LM Studio, click Models and browse available ones.

- Pick a 7B model for faster performance.

Step 3: Download & Run

- Click Download to get the model files.

- Click Run Model to start chatting.

Tips for Better Performance

- Use a GPU: If you have an NVIDIA GPU, keep its drivers up to date. Ollama uses a supported GPU automatically, and LM Studio lets you enable GPU offload in its settings.

- Smaller Models Run Faster: A 7B model is faster than a 13B or 70B model.

- Close Other Programs: Free up RAM before running the model.

- Use Quantized Models: These store the weights at lower precision (for example, 4-bit instead of 16-bit), so they use less RAM and storage at a small cost in output quality.
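To give a feel for what quantization does, here is a deliberately simplified sketch: it maps a handful of floating-point weights to small signed integers plus one shared scale factor, then converts them back. This is only an illustration of the idea. The formats used by real tools are more elaborate, and the function names and sample weights here are made up.

```python
def quantize(weights, bits=4):
    """Map floats to signed integers in [-(2**(bits-1) - 1), 2**(bits-1) - 1]."""
    levels = 2 ** (bits - 1) - 1  # 7 for 4-bit
    # One shared scale for the whole list; fall back to 1.0 if all weights are 0.
    scale = max(abs(w) for w in weights) / levels or 1.0
    return [round(w / scale) for w in weights], scale


def dequantize(q_values, scale):
    """Recover approximate floats from the stored integers."""
    return [q * scale for q in q_values]


weights = [0.82, -0.41, 0.07, -0.95, 0.33]  # made-up sample values
q, scale = quantize(weights)
restored = dequantize(q, scale)
print("integers:", q)
print("max error:", max(abs(a - b) for a, b in zip(weights, restored)))
```

Each weight now needs only 4 bits instead of 16 or 32, and the restored values are close to the originals but not identical. That small rounding error is the quality trade-off you accept in exchange for a model that fits in memory.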

Expanding Your Setup

Once your LLM is running, you can:

- Integrate with Local Apps: Use it in text editors or coding IDEs.

- Host Locally for API Access: Connect it to custom projects.

- Fine-Tune: Train the model with your own data for specific tasks.
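As an example of API access, Ollama serves a local HTTP API while it is running, by default at `http://localhost:11434`. The sketch below sends one prompt to its `/api/generate` endpoint using only Python's standard library. The helper names `build_payload` and `generate` are my own, and the code assumes Ollama is running on the default port with the `llama2` model already pulled.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/generate"  # Ollama's default local address


def build_payload(prompt, model="llama2"):
    """Encode a non-streaming generate request as JSON bytes."""
    body = {"model": model, "prompt": prompt, "stream": False}
    return json.dumps(body).encode("utf-8")


def generate(prompt, model="llama2", url=OLLAMA_URL, timeout=300):
    """Send one prompt to the local server and return the reply text."""
    request = urllib.request.Request(
        url,
        data=build_payload(prompt, model),  # supplying data makes this a POST
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        reply = json.load(response)
    return reply["response"]
```

Calling `generate("Write a haiku about Windows.")` should return the model's answer as a string, which you can then pass to whatever app or script you are building. Setting `"stream": False` asks for the whole reply in one JSON object rather than a stream of partial chunks, which keeps the example short.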

Final Thoughts

Running an LLM locally on Windows gives you control, privacy, and flexibility. Whether you’re building chatbots, generating content, or experimenting with AI, tools like Ollama and LM Studio make it easy to get started.

If you’re serious about AI development, consider investing in more RAM and a good GPU to unlock larger, more capable models.